Embarking on the Data Science @ Command Line Challenge: Day 1 - Getting Started

Navigating Prerequisites, Setting Up Environments, and Unleashing the Power of Docker

Embarking on the Data Science @ Command Line journey is an exhilarating experience, and Day 1 sets the stage for a fascinating exploration. The initial steps involve downloading a curated dataset, creating a dedicated working directory, and unraveling the potential of WSL2. This blog post chronicles the enthralling start to the challenge, delving into the nuances of environment setup, Docker utilization, and the first encounter with the data landscape.

Download the dataset

To commence the Data Science @ Command Line Challenge, the initial imperative is acquiring the designated dataset. Navigate to https://www.datascienceatthecommandline.com/2e/data.zip and follow the systematic approach outlined in the blog. Establishing a well-organized working directory is pivotal for streamlined data handling. In my case, I opted for a directory named 'dsatcl' within the 'wq' folder situated in the home directory of my WSL2-Ubuntu-20.04 environment. The journey from the home directory to the working directory was facilitated by the command:

cd wd/dsatcl

Having reached the designated space, the dataset was acquired and unzipped with the following commands:

wget https://www.datascienceatthecommandline.com/2e/data.zip

unzip data.zip

cd data

Subsequently, I navigated into the 'data' directory, revealing a structured arrangement of folders corresponding to each chapter from 'ch02' to the final chapter.

Set up the environment with the command line

The crux of effective data science exploration lies in the seamless integration of the environment. This involves a series of steps elucidated in the blog, ensuring a harmonious collaboration between Docker and WSL2.

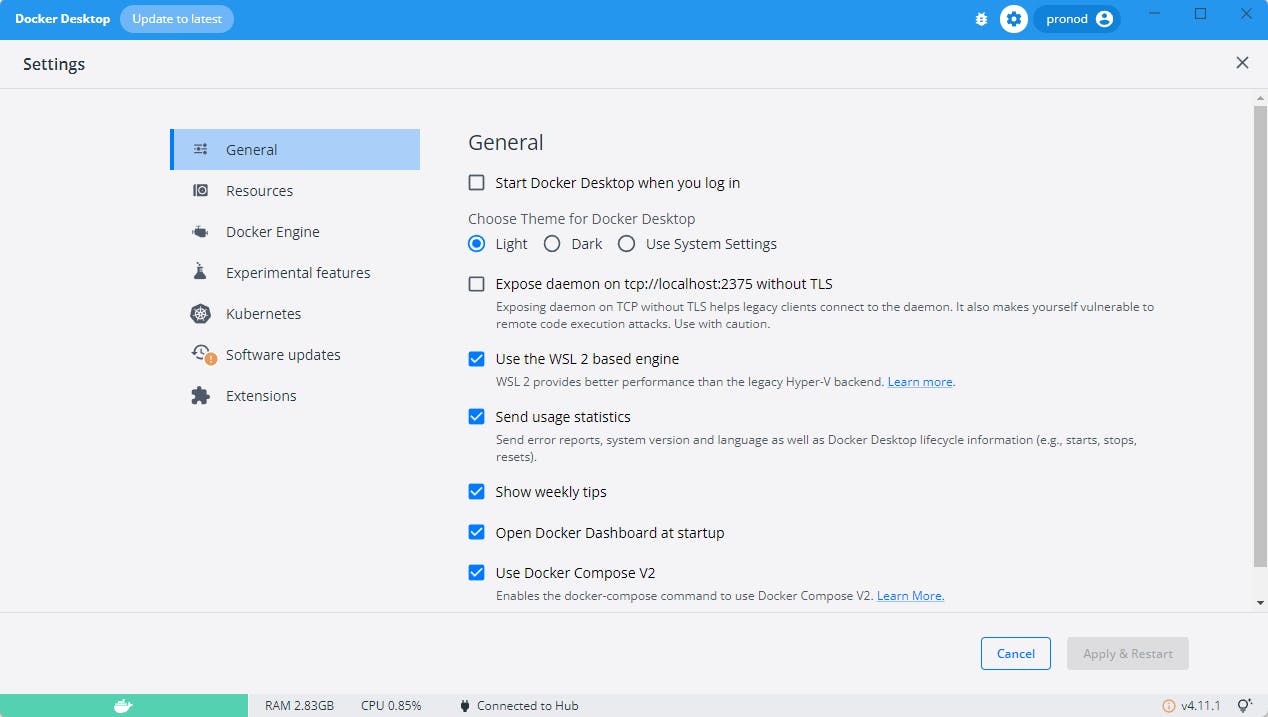

The initial phase necessitates Docker installation on the Windows system. Upon launching Docker for the first time, a secure login is performed. The subsequent steps, neatly elucidated on the interface, guide users through the configuration process. Crucial to this setup is the selection of "Use the WSL 2 based engine," ensuring compatibility and optimal performance within the WSL2 environment (see image below).

Further fine-tuning is accomplished through the 'Resources' section, where specific settings are adjusted to enable Docker within the WSL2 environment (See below image).

This strategic integration lays the groundwork for a seamless transition between the Windows system and the Ubuntu 20.04 environment within WSL2.

To complete the environment setup, the next step involves pulling the Docker container specifically crafted for the Data Science @ Command Line Challenge:

docker pull datasciencetoolbox/dsatcl2e

This container, tailored for Ubuntu 20.04, encompasses a toolkit featuring essential tools such as 'jq,' 'xmlstarlet,' 'GNU parallel,' 'xsv,' 'pup,' and 'vowpal wabbit.' Leveraging Packer, Ansible, and Docker, this toolbox project container encapsulates a comprehensive environment for efficient command-line-driven data science endeavors.

Following the successful download, the container is executed within the 'data' directory:

docker run --rm -it -v "$(pwd)":/data datasciencetoolbox/dsatcl2e

This command, with its array of options, orchestrates an interactive and dynamic interaction with the Docker container, facilitating the sharing of files and resources between the host machine and the container.

Here's a breakdown of each option:

--rm: This option automatically removes the container when it exits. It is useful for cleaning up temporary containers to save disk space.-it: These are two separate options:-istands for interactive mode, which allows you to interact with the container's standard input (stdin).-tallocates a pseudo-TTY, which makes the interaction with the container more like a terminal.

Together, -it is commonly used for running containers that require interactive input, like a command-line interface.

-v ${PWD}:/data: This option is used to mount a volume from the host machine into the container. It uses the syntax-v host_path:container_path. In this case:${PWD}is a shell variable representing the present working directory.:/dataspecifies that the current working directory on the host should be mounted into the/datadirectory in the container.

This is often used to share files between the host and the container, allowing data or code to be accessed and modified from both environments.

datasciencetoolbox/dsatcl2e: This is the name of the Docker image that will be used to create the container. Docker images are essentially snapshots of a file system with the necessary dependencies and configurations to run an application. In this case, the image isdatasciencetoolbox/dsatcl2e.

The journey culminates in a robust environment tailored for the intricacies of the Data Science @ Command Line Challenge.

Day 1 of the Data Science @ Command Line Challenge marks a crucial initiation into the world of command-line-driven data science. The establishment of a structured working directory, dataset exploration, and seamless integration of Docker for an enriched environment sets the stage for exciting future endeavors. Armed with foundational knowledge, the journey promises an immersive exploration of data science techniques, all orchestrated through the command line.

Stay Tuned for Day 2

Prepare for the next installment of our Data Science @ Command Line Challenge. Day 2 will uncover advanced techniques, tips, and tricks to elevate your command line data science skills. Whether you're a beginner or a seasoned practitioner, there's always something new to discover. Stay curious, stay engaged, and let the command line adventure continue!

Acknowledgments

A special thanks to the creators of the Data Science @ Command Line Challenge (me) and the Docker image (datasciencetoolbox/dsatcl2e). Your efforts have made the onboarding process smoother for aspiring data scientists like us.